Load a file in HDFS into HBase using Pig

Suppose we have a file in HDFS that we want to load into an HBase table. Pig makes this straightforward, and it takes two steps:

- Create the table in HBase.

- Run the Pig script to load the file into the newly created table.

As an example, we will load the file u.user, located in /user/ubuntu/ml-100k/, into HBase. This file has 5 columns and is pipe (|) delimited.

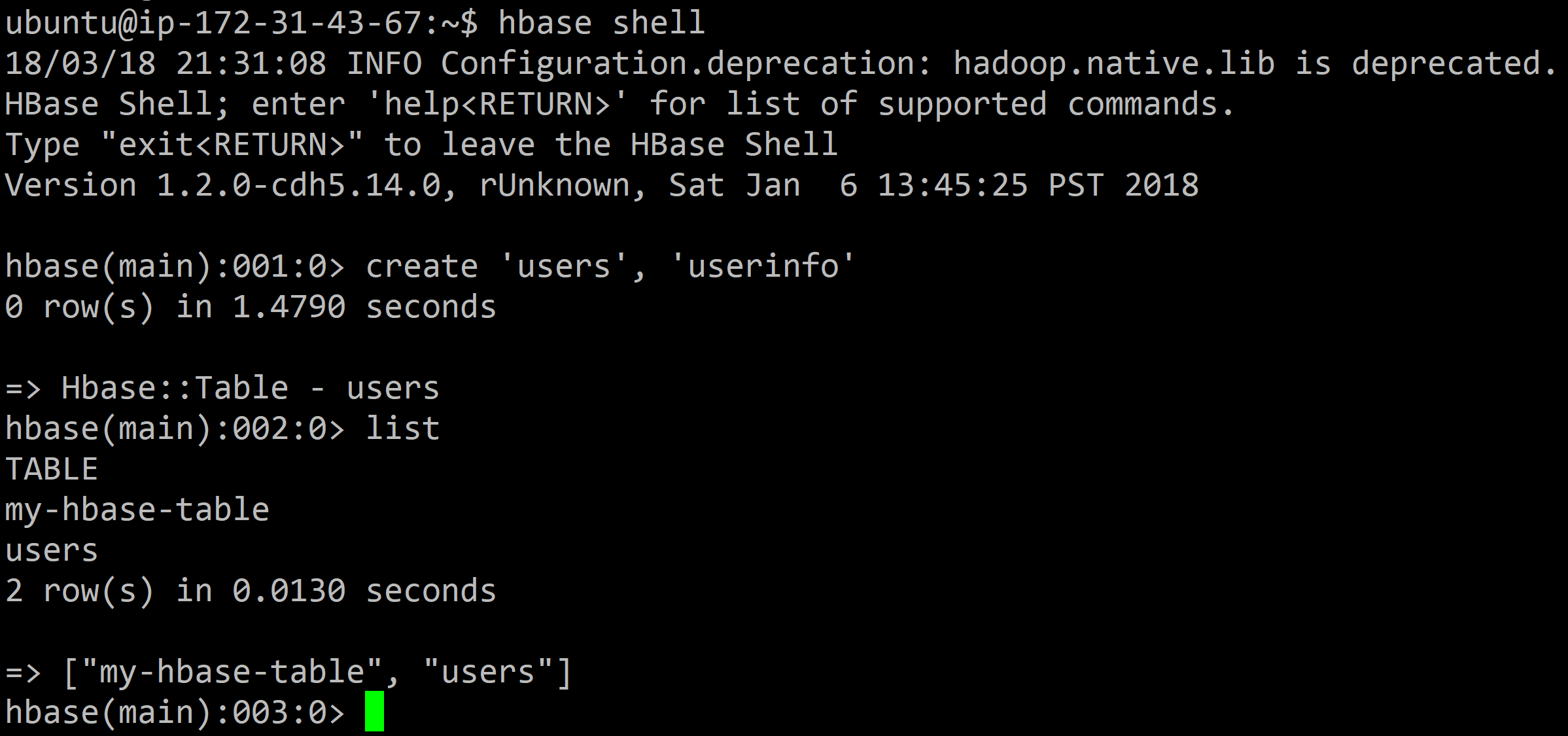
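To confirm the layout before loading, the number of fields per line can be checked from the terminal. The following is a minimal sketch: `count_fields` is a hypothetical helper name, the two sample rows are illustrative stand-ins in the same format, and the `hdfs` command is assumed to be on the PATH.

```shell
# Print the distinct number of pipe-delimited fields found on stdin.
# A clean 5-column file yields the single line "5".
count_fields() {
  awk -F'|' '{ print NF }' | sort -u
}

# Illustrative sample rows (stand-ins for the real file contents).
printf '1|24|M|technician|85711\n2|53|F|other|94043\n' | count_fields

# On the cluster, run the same check against the file in HDFS
# (skipped when the hdfs CLI is not installed).
if command -v hdfs >/dev/null 2>&1; then
  hdfs dfs -cat /user/ubuntu/ml-100k/u.user | count_fields
fi
```

If more than one number is printed, some lines have a different number of fields and are worth inspecting before the load.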

In the Terminal, enter the HBase shell:

hbase shell

Then create a new table users with one column family userinfo using the following command:

create 'users', 'userinfo'

Exit the HBase shell:

exit

Then start the Pig shell (Grunt):

pig

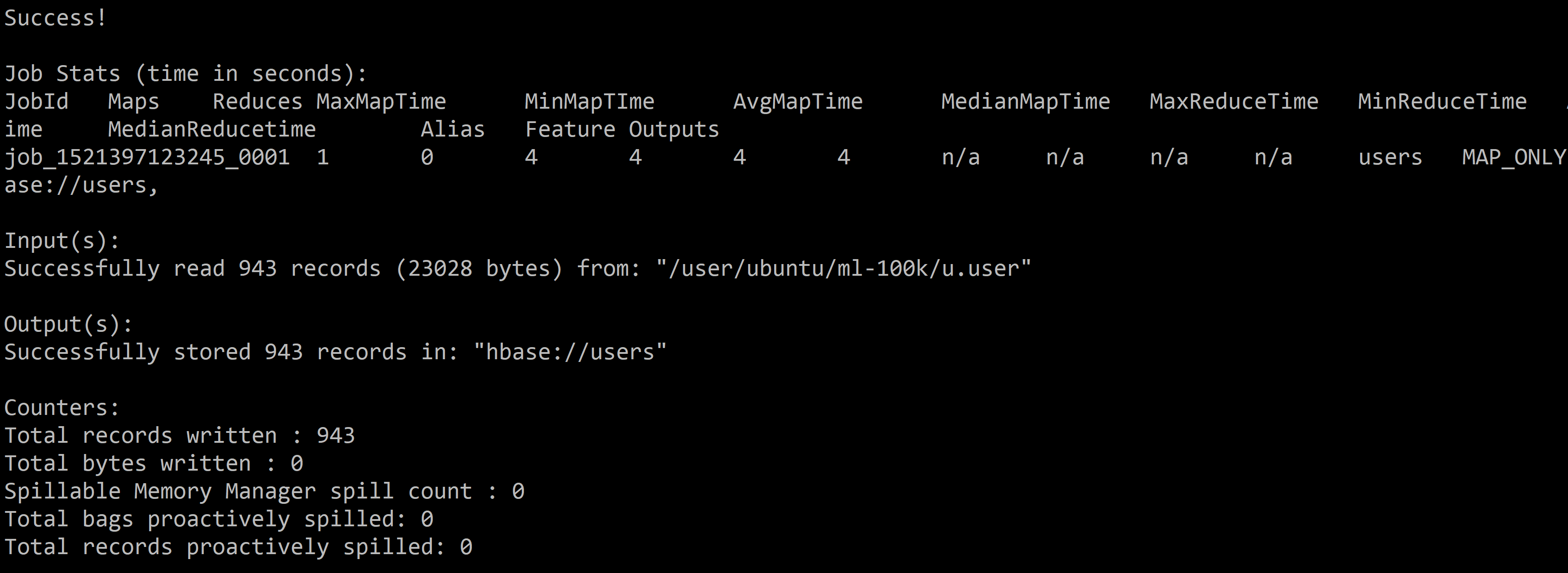

In the Pig shell, run the following command to load the file:

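users = LOAD '/user/ubuntu/ml-100k/u.user'
USING PigStorage('|')
AS (userID:int, age:int, gender:chararray, occupation:chararray, zip:chararray);

The zip field is declared as chararray rather than int because some postal codes in this file contain letters, and an int cast would also drop leading zeros.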
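Pig casts each field to the type declared in the schema, and a value that cannot be cast is loaded as null. A quick way to see whether a column is safe to declare as int is to count its non-numeric values first. This is only a sketch: `non_numeric_count` is a hypothetical helper, and the sample rows are illustrative.

```shell
# Count the lines on stdin whose field number $1 (1-based) is not made
# up entirely of digits. Fields are separated by '|'.
non_numeric_count() {
  awk -F'|' -v col="$1" '$col !~ /^[0-9]+$/ { n++ } END { print n + 0 }'
}

# Illustrative rows: the second one has a postal code with letters.
printf '1|24|M|technician|85711\n74|39|M|scientist|T8H1N\n' | non_numeric_count 5

# On the cluster, check the fifth column of the real file
# (skipped when the hdfs CLI is not installed).
if command -v hdfs >/dev/null 2>&1; then
  hdfs dfs -cat /user/ubuntu/ml-100k/u.user | non_numeric_count 5
fi
```

A result greater than zero means an int declaration would turn those values into nulls. The check does not detect leading zeros, which an int cast would also drop.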

Then we can store the relation in the HBase table. HBaseStorage uses the first field of the relation (here userID) as the row key, so the column list names only the remaining four fields.

STORE users INTO 'hbase://users'
USING org.apache.pig.backend.hadoop.hbase.HBaseStorage(
'userinfo:age,userinfo:gender,userinfo:occupation,userinfo:zip');
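To make the mapping concrete, the sketch below prints how one input line would be laid out in the table: the first field becomes the row key, and the remaining fields go to the columns listed in the STORE statement, in order. It only simulates the mapping with awk and does not talk to HBase. The helper name `to_cells` and the sample row are illustrative.

```shell
# Print the row key and column-family:qualifier assignments that each
# pipe-delimited input line corresponds to.
to_cells() {
  awk -F'|' '{
    printf "row=%s userinfo:age=%s userinfo:gender=%s userinfo:occupation=%s userinfo:zip=%s\n", $1, $2, $3, $4, $5
  }'
}

# Illustrative sample row.
printf '1|24|M|technician|85711\n' | to_cells
# prints: row=1 userinfo:age=24 userinfo:gender=M userinfo:occupation=technician userinfo:zip=85711
```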

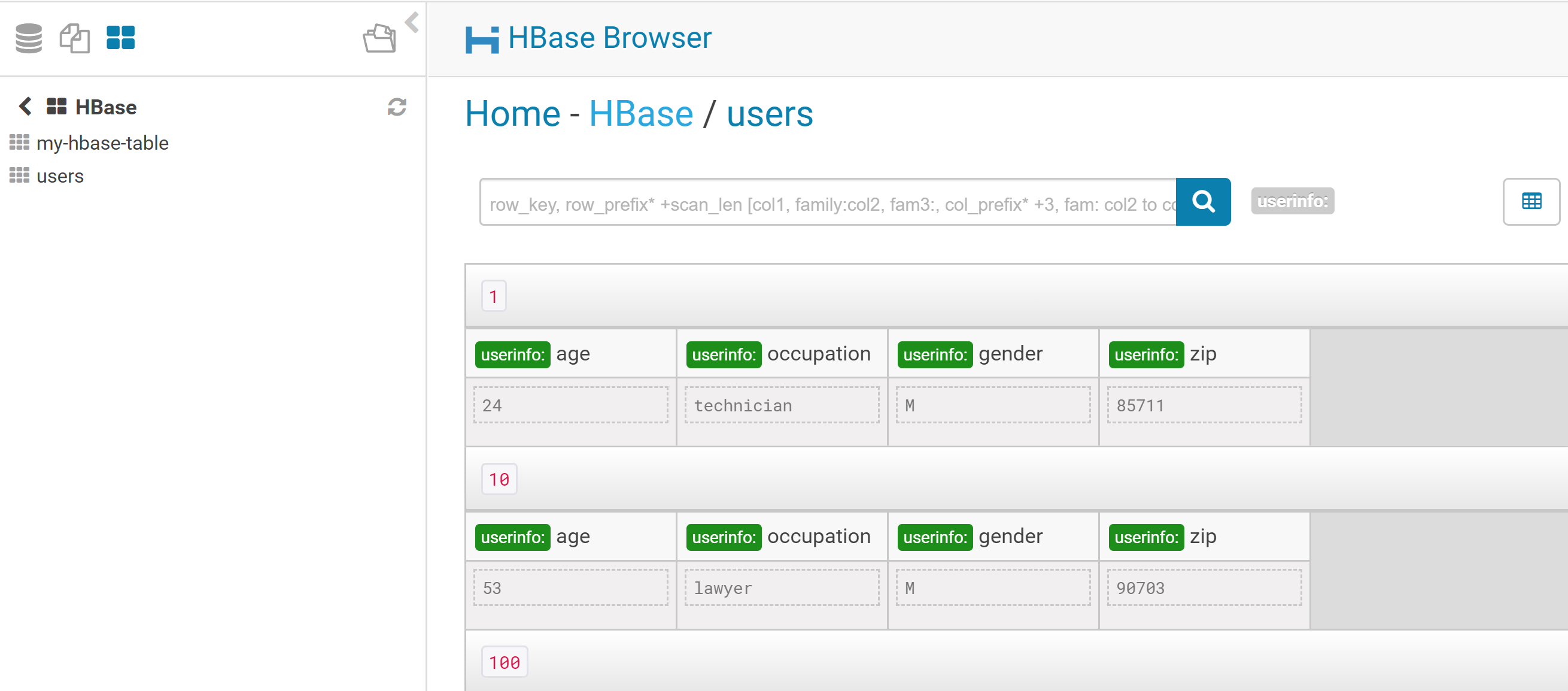
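The result can also be checked from the terminal by piping a scan command to the HBase shell. This is a minimal sketch, assuming the `hbase` command is on the PATH. `scan_command` is a hypothetical helper that only builds the command string.

```shell
# Build an HBase shell scan command for table $1, limited to $2 rows.
scan_command() {
  printf "scan '%s', {LIMIT => %s}\n" "$1" "$2"
}

# Show the command that will be sent to the HBase shell.
scan_command users 3

# Run it when the hbase CLI is available.
if command -v hbase >/dev/null 2>&1; then
  scan_command users 3 | hbase shell
fi
```

Each returned row should show the user ID as the row key, with cells under the userinfo column family.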

We can now browse the table in HUE using the HBase browser: